Over the Christmas holidays, I fell into a bit of a rabbit hole. It started with Ubiquiti announcing the Unifi Express, and I thought: “Hey, I could use that and replace my Amplifi HD router”. The reality was already a bit more complicated: the Amplifi HD is just my apartment router and Wifi AP, while in the basement I use an EdgeRouter X to separate the networks for the two apartments in my house.

Networking rabbit hole

Unfortunately (or “Fortunately”, however you see it), the Unifi Express was not ready for shipping, so I was not able to buy it. With that, I read up on some details about the Unifi Ecosystem and the different hardware available, decided to “dream big”, and bought a Dream Machine Pro and a U6+ for Wifi.

After running this setup for a few days and discovering some of its potential, I realized that I had been doing it wrong for years. I can segment my network with VLANs and partition my Wifi devices the same way, I get (relatively inexpensive) managed switches that I can configure from a central UI, and setting up a firewall is simple (at least with the more recent firmware). The reliability and performance are way beyond what I ever experienced, even with more high-end consumer devices.

I quickly added a Keystone Patch Panel (also from Ubiquiti since it looked so nice alongside the UDM) and a Flex Mini switch for my office.

Services rabbit hole

Now, having a relatively elaborate network setup with nothing but client machines on it makes it hard to come up with a reason to partition the network relatively strictly. So: what could I host locally besides my tried-and-tested TrueNAS Scale for media, local backups and document sharing? Well, if you have ever had a look around the blog, you have probably already noticed that I’m into self-hosting. Due to my limited network and system infrastructure, however, I never thought about running much of that stuff at home, because I would then need to invest much more in backup strategies etc.

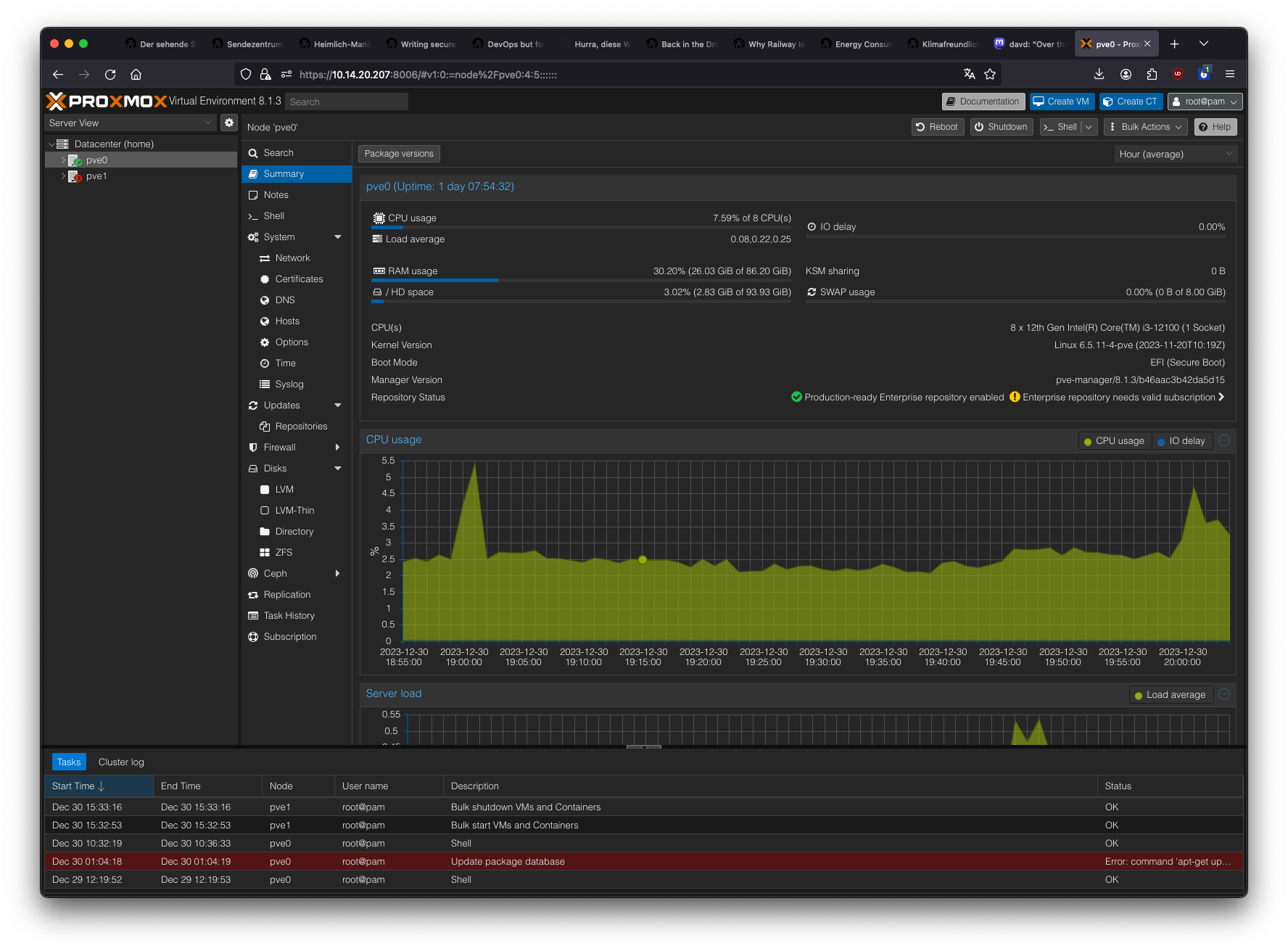

Since I had a spare system with a relatively recent i3 lying around, I gave Proxmox a try, after probably 5 years of not thinking about it. Turns out: if you have a hypervisor set up, you start creating VMs pretty fast - unless your system only has 16GB of memory ;)

So, I moved my Home Assistant installation from my Raspberry Pi to a VM, as well as FreshRSS and Immich; the latter two had run on a Cloud VPS until then. Of course, once you have some services running, you need some more support services. I moved all the VM storage from my local system to my TrueNAS box (shared over NFS, since I had some problems with iSCSI on the Proxmox side of things) and set up a ZFS snapshot-based backup to an old Dell Optiplex 7010 from 10 years ago. This, of course, requires some monitoring, so I also looked into Uptime Kuma. I also added some off-site backup for the most critical data. As it turns out, having centralized storage makes backing things up very manageable. No VM, not even the hypervisor, needs to know about any of it. I just snapshot the ZFS dataset that’s shared with the hypervisor and replicate it with `zfs send` and `zfs recv` to another box. That’s it.
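To make the snapshot-and-replicate idea a bit more concrete, here is a minimal Python sketch of how such a job could be wired up. It is not my actual script: the dataset names, the `repl-` snapshot prefix, the host name and the use of SSH as transport are all assumptions made for illustration. It only relies on `zfs snapshot`, `zfs send` (optionally with `-i` for an incremental stream) and `zfs recv -F`.

```python
import subprocess
from datetime import datetime, timezone


def snapshot_name(dataset, now=None):
    """Build a timestamped snapshot name (the 'repl-' prefix is an assumption)."""
    now = now or datetime.now(timezone.utc)
    return f"{dataset}@repl-{now:%Y%m%d-%H%M%S}"


def build_commands(snapshot, previous, target_host, target_dataset):
    """Return the three commands: take snapshot, send it, receive it remotely."""
    snap_cmd = ["zfs", "snapshot", snapshot]
    send_cmd = ["zfs", "send"]
    if previous:
        # Incremental stream: only the changes since the previous snapshot.
        send_cmd += ["-i", previous]
    send_cmd.append(snapshot)
    # -F rolls the target back to its latest snapshot before receiving.
    recv_cmd = ["ssh", target_host, "zfs", "recv", "-F", target_dataset]
    return snap_cmd, send_cmd, recv_cmd


def replicate(dataset, previous, target_host, target_dataset):
    """Snapshot `dataset` and pipe `zfs send` into `zfs recv` on another box."""
    snapshot = snapshot_name(dataset)
    snap_cmd, send_cmd, recv_cmd = build_commands(
        snapshot, previous, target_host, target_dataset
    )
    subprocess.run(snap_cmd, check=True)
    sender = subprocess.Popen(send_cmd, stdout=subprocess.PIPE)
    subprocess.run(recv_cmd, stdin=sender.stdout, check=True)
    sender.stdout.close()
    if sender.wait() != 0:
        raise RuntimeError("zfs send failed")
    return snapshot
```

A call like `replicate("tank/vms", None, "backup-box", "backup/vms")` would do a full initial transfer (all four arguments are placeholders); later runs would pass the name of the previous snapshot to send only the difference.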

To make things nice, of course, we need a reverse proxy with proper TLS, so I set up a separate VM with Caddy, which grabs a wildcard cert from Let’s Encrypt using DNS-01. That way I still don’t have to expose any ports publicly.
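The reason DNS-01 works without any open ports is that the proof of domain ownership is a TXT record, not an HTTP request to my network. As a rough illustration (Caddy and its DNS provider module handle all of this automatically), the following Python sketch derives the record name and value the way the ACME specification describes it. The domain and the key authorization string are made-up placeholders.

```python
import base64
import hashlib


def dns01_record_name(domain):
    """TXT record name for a DNS-01 challenge.

    A wildcard such as *.home.example.com is validated against the
    base domain, so the "*." prefix is dropped.
    """
    base = domain[2:] if domain.startswith("*.") else domain
    return f"_acme-challenge.{base}"


def dns01_record_value(key_authorization):
    """TXT record value: unpadded base64url of SHA-256(key authorization)."""
    digest = hashlib.sha256(key_authorization.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


# Placeholder values: a real key authorization is the challenge token
# from the CA joined with the ACME account key thumbprint by a dot.
name = dns01_record_name("*.home.example.com")
value = dns01_record_value("example-token.example-thumbprint")
print(name, "TXT", value)
```

The CA only needs to look up that TXT record in public DNS, so the reverse proxy itself can stay entirely inside the local network.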

So far so good, but now that I have invested so much in support services, I want to utilize those three systems to their fullest potential.

Hardware rabbit hole

It’s too long to describe in detail, and I think by now you recognize the pattern here. This will never stop. So:

- Upgraded my old 3rd gen i3-based TrueNAS to a Ryzen 5600 with 32GB ECC RAM (to get error correction on the storage side at least)

- Added more RAM to my Proxmox system with mismatched memory modules, totaling around 86GB now

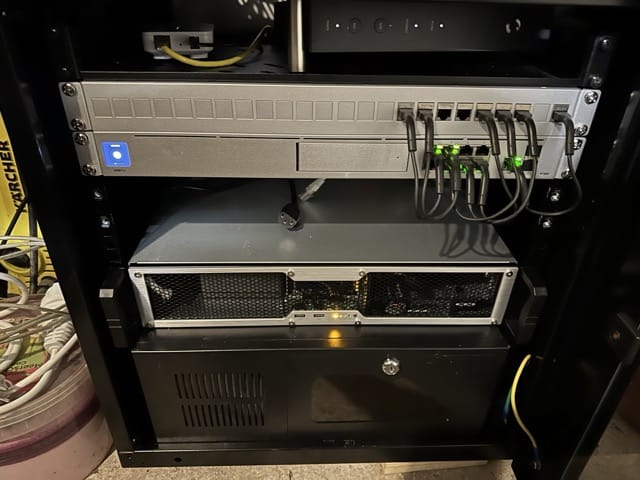

- Recognized I cannot fit all those boxes in my network cabinet, so I ordered a Chenbro RM24100 2U short-depth chassis and moved my Proxmox box there

- Added some more VMs, including some internal services for my small business, which enabled me to save some money on Hetzner Cloud, so I needed a bit more memory again

- Some of my apps run on arm64 in Hetzner Cloud, and `docker buildx` for arm64 on my machine is so slow that I cannot reasonably use my local infrastructure to build those images, so now I’m looking for an affordable, short-depth 1U ARM server that can be utilized as a GitLab runner (is there Proxmox for arm64?)

- I’d need another switch and two APs

I guess that’s it. That is a lot of stuff for basically 14 days, but I’ve learned so much, and I really like the setup so far. Even with the high utility costs in Germany, with the money I save every month on cloud services, I think the upfront cost will pay off within the next 1-2 years. And in the end, it’s about the fun and the learning experience, isn’t it?